Lue Fan (范略)

Assistant Professor

NLPR (Pattern Recognition Laboratory), Institute of Automation

Chinese Academy of Sciences

Email: lue.fan at ia.ac.cn

[Google Scholar][GitHub]


About Me

I am an assistant professor at NLPR, Institute of Automation, Chinese Academy of Sciences, working with Prof. Zhaoxiang Zhang. I received my Ph.D. from this lab in June 2024, supervised by Prof. Zhaoxiang Zhang, and my bachelor's degree in automation from Xi'an Jiaotong University (XJTU) in 2019. I was a research intern at TuSimple, supervised by Dr. Naiyan Wang and Dr. Feng Wang. I currently work closely with Prof. Hongsheng Li at MMLab.

🎯 Our NLPR lab is actively recruiting interns and postdocs! If you are interested in autonomous driving, embodied AI, or coding agents, please feel free to reach out via email ({lue.fan, zhaoxiang.zhang}@ia.ac.cn).

News

- [2025-11] Received the Outstanding Doctoral Dissertation Award of CSIG (China Society of Image and Graphics Doctoral Dissertation Incentive Program)
- [2025-08] Received the Outstanding Doctoral Dissertation Award of CAS (Chinese Academy of Sciences Outstanding Doctoral Dissertation)
- [2025-07] Three papers accepted to ICCV 2025
- [2025-02] FreeSim and FlexDrive accepted to CVPR 2025
- [2025-01] LAW accepted to ICLR 2025

Selected Work

*: Equal Contribution; †: Corresponding Author

2026

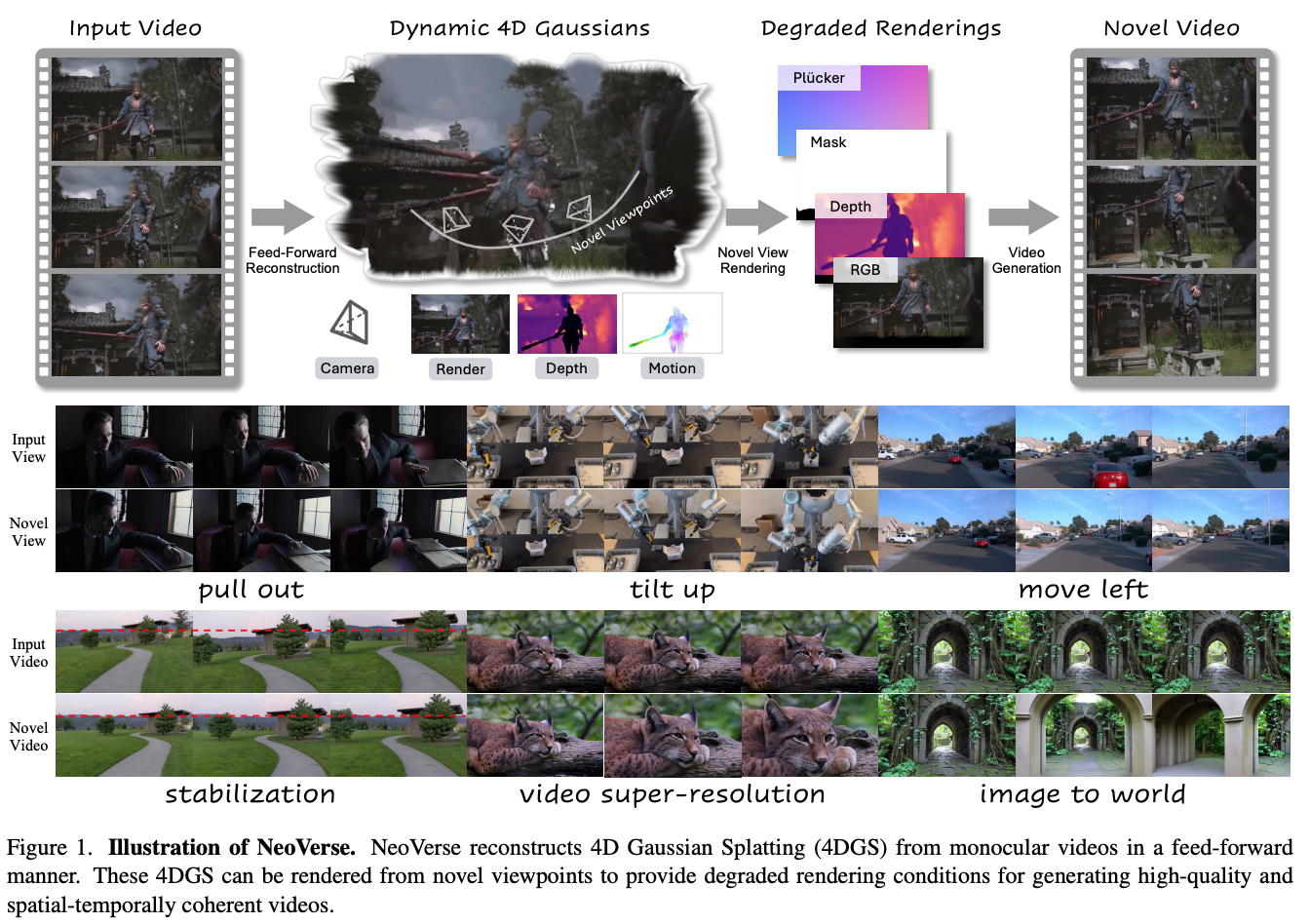

NeoVerse: Enhancing 4D World Model with in-the-wild Monocular Videos

Yuxue Yang, Lue Fan† (project lead), Ziqi Shi, Junran Peng, Feng Wang, Zhaoxiang Zhang†

[Paper][Project Page][新智元 Article (in Chinese)]

2025

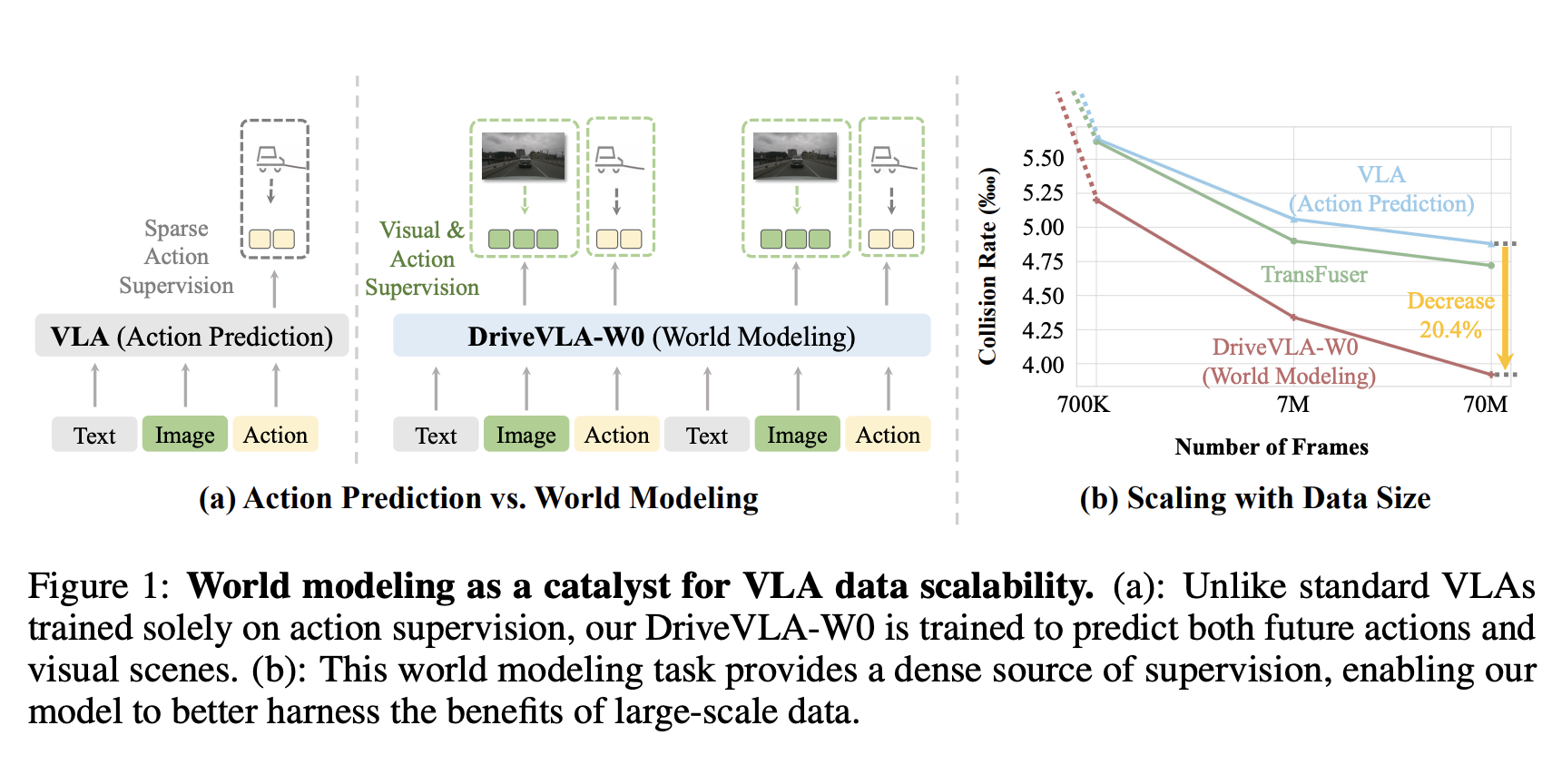

DriveVLA-W0: World Models Amplify Data Scaling Law in Autonomous Driving

Yingyan Li*, Shuyao Shang*, Weisong Liu*, Bing Zhan*, Haochen Wang*, Yuqi Wang, Yuntao Chen, Xiaoman Wang, Yasong An, Chufeng Tang, Lu Hou, Lue Fan†, Zhaoxiang Zhang†

[Paper][Code]

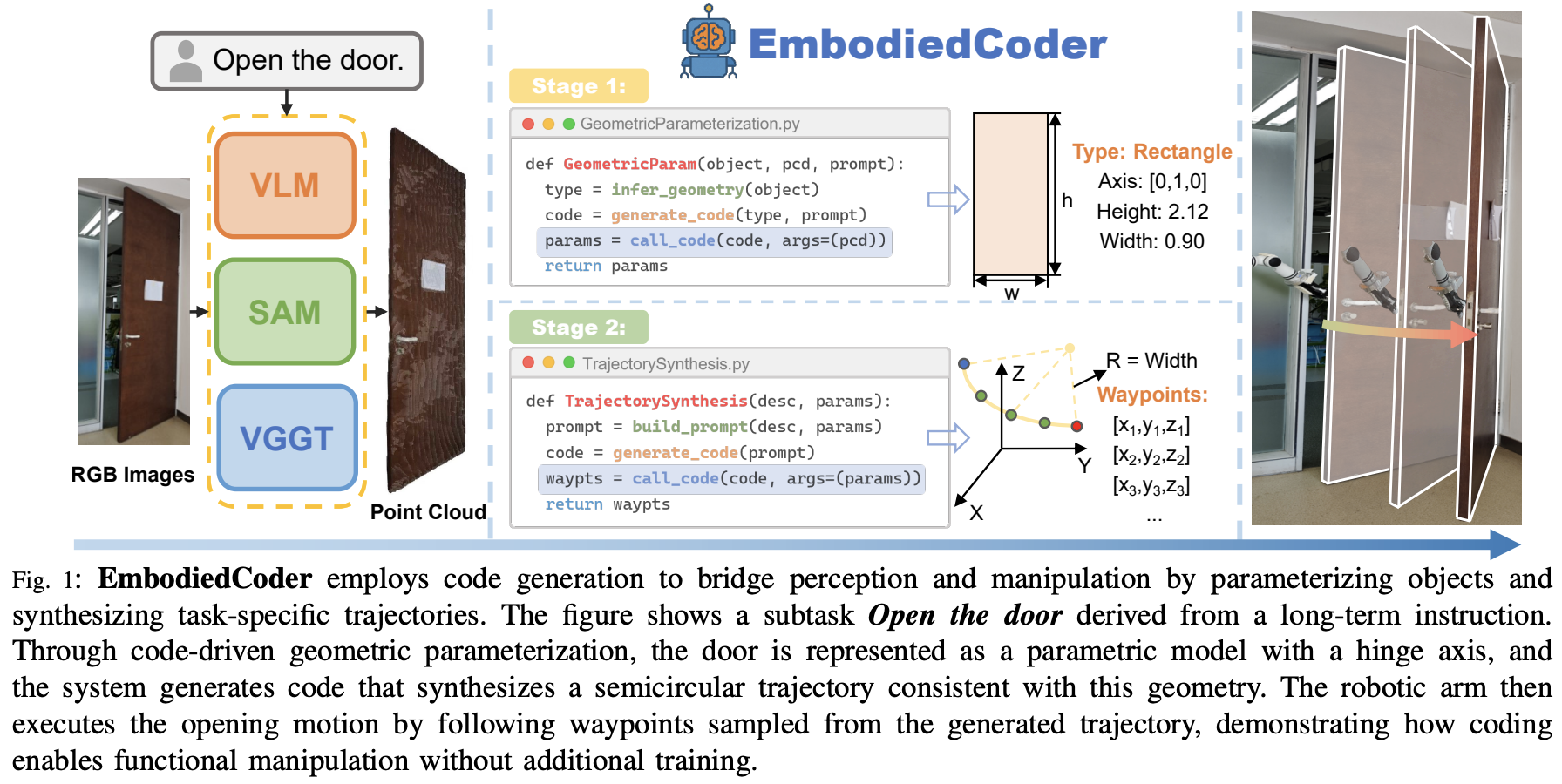

EmbodiedCoder: Parameterized Embodied Mobile Manipulation via Modern Coding Model

Zefu Lin, Rongxu Cui, Chen Hanning, Xiangyu Wang, Junjia Xu, Xiaojuan Jin, Chen Wenbo, Hui Zhou, Lue Fan†, Wenling Li, Zhaoxiang Zhang†

[Paper][Project Page]

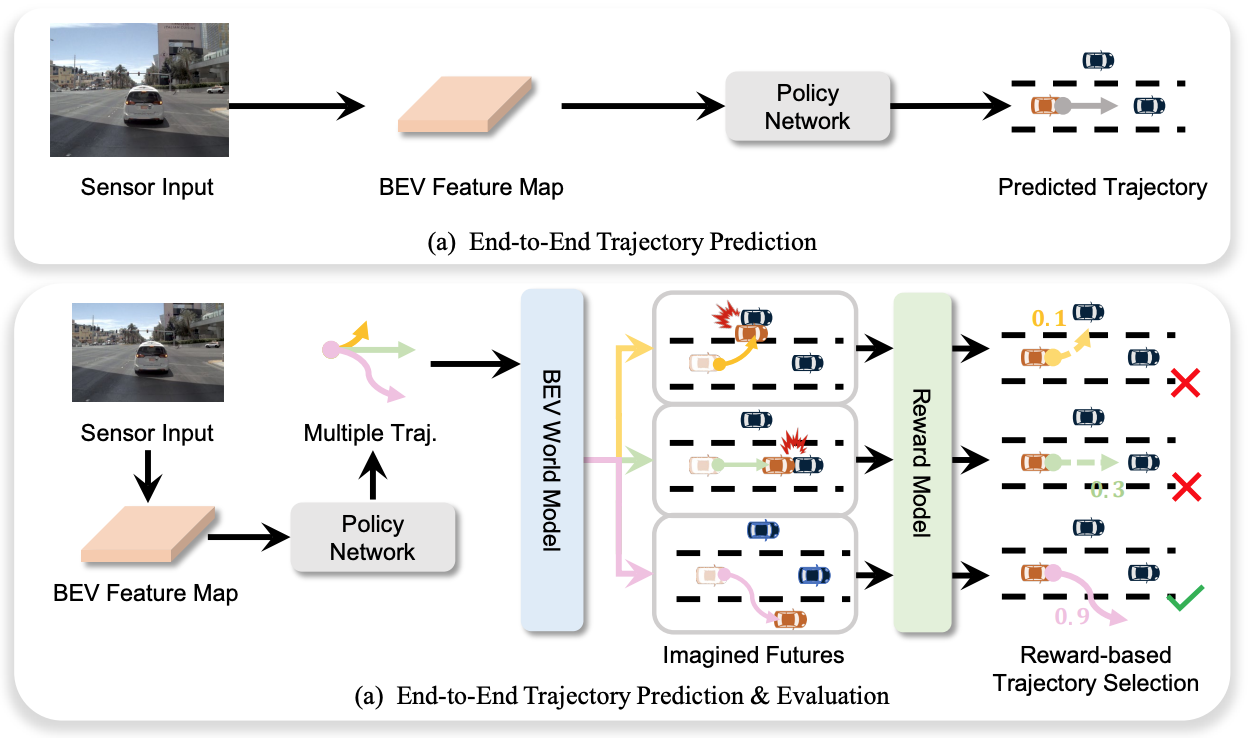

End-to-End Driving with Online Trajectory Evaluation via BEV World Model

Yingyan Li, Yuqi Wang, Yang Liu, Jiawei He, Lue Fan†, Zhaoxiang Zhang†

ICCV 2025

[Paper][Code]

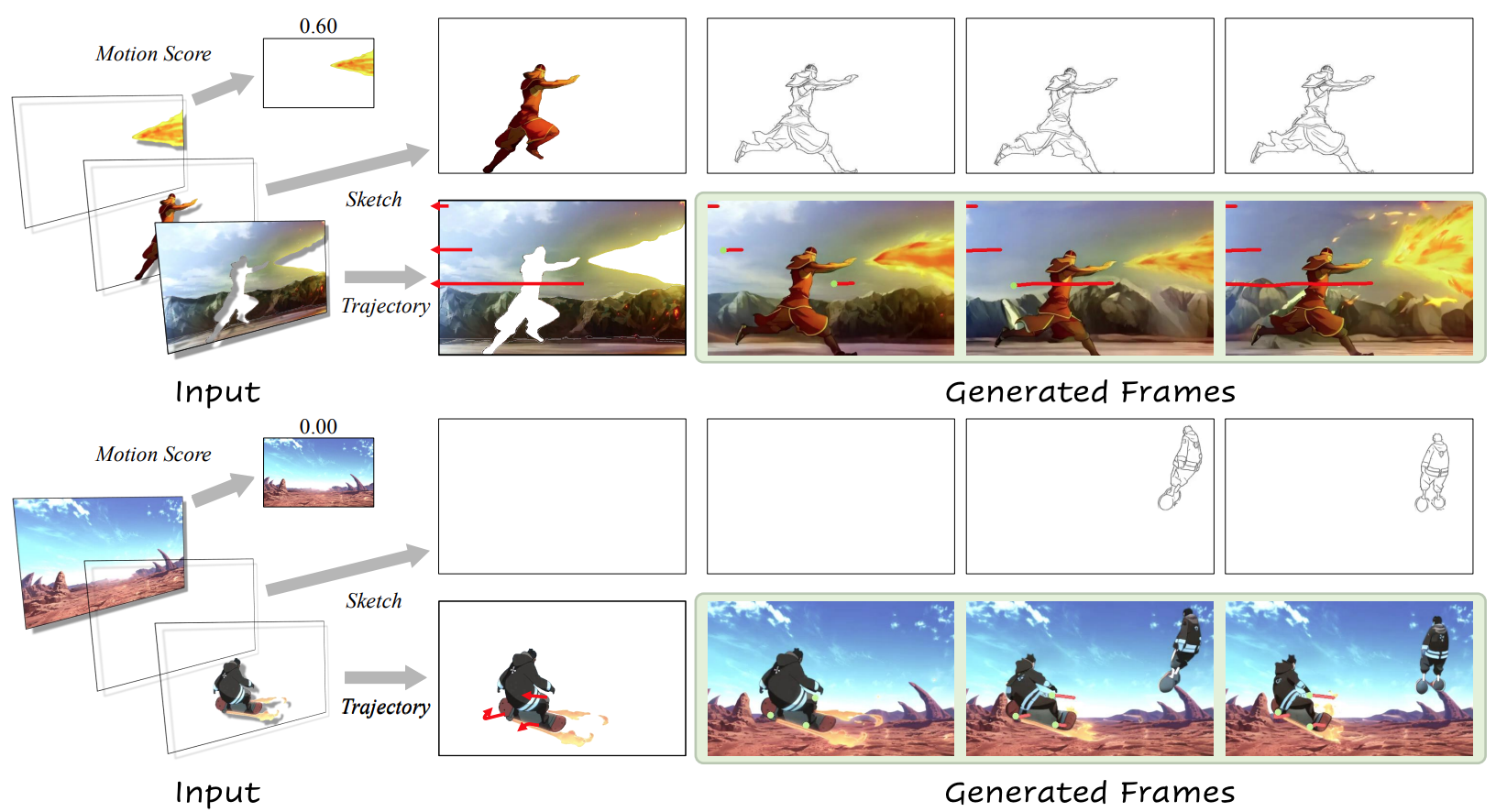

LayerAnimate: Layer-level Control for Animation

Yuxue Yang, Lue Fan, Zuzeng Lin, Feng Wang, Zhaoxiang Zhang

ICCV 2025

[Paper][Project Page]

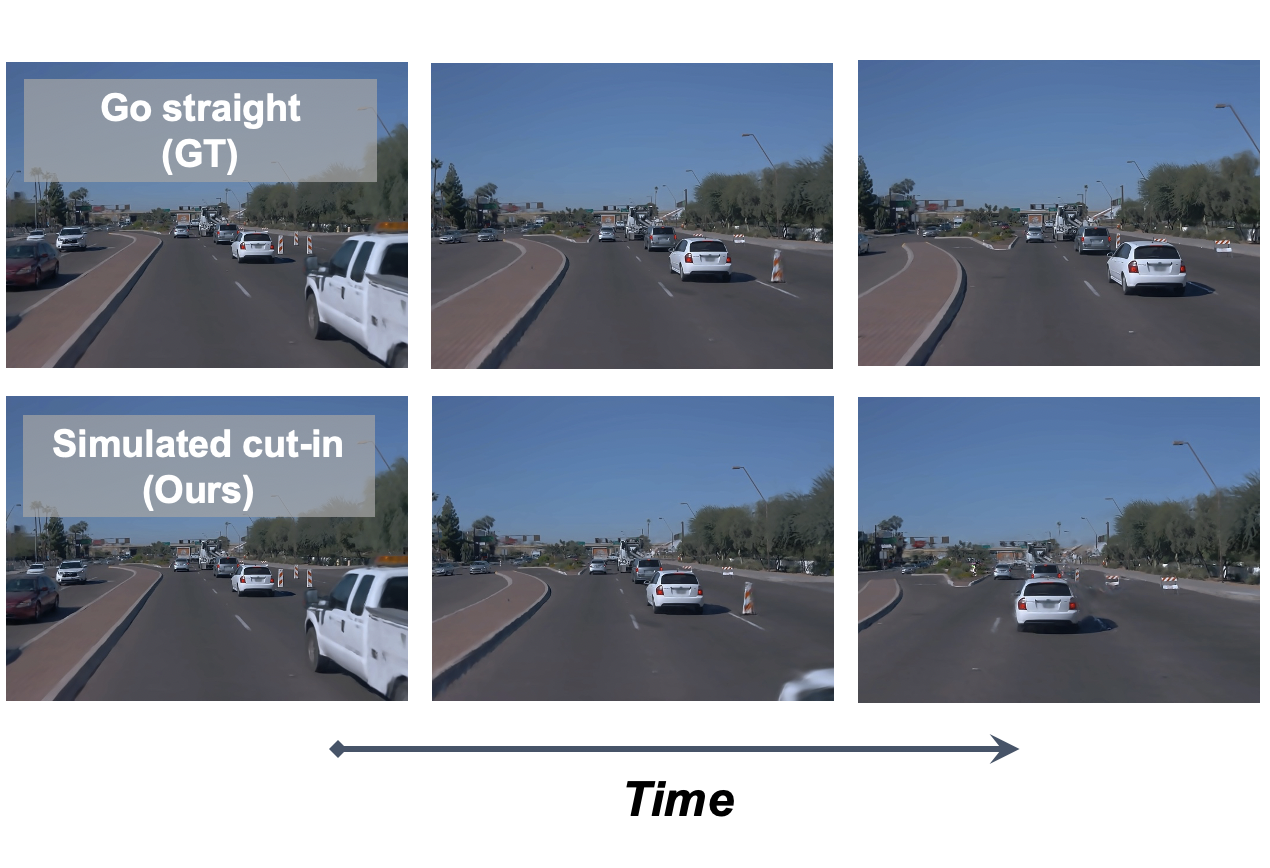

FreeSim: Toward Free-viewpoint Camera Simulation in Driving Scenes

Lue Fan*, Hao Zhang*, Qitai Wang, Hongsheng Li†, Zhaoxiang Zhang†

CVPR 2025

[Paper][Project Page]

FlexDrive: Toward Trajectory Flexibility in Driving Scene Reconstruction and Rendering

Jingqiu Zhou*, Lue Fan*, Linjiang Huang, Xiaoyu Shi, Si Liu, Z. Zhang†, Hongsheng Li†

CVPR 2025

[Paper]

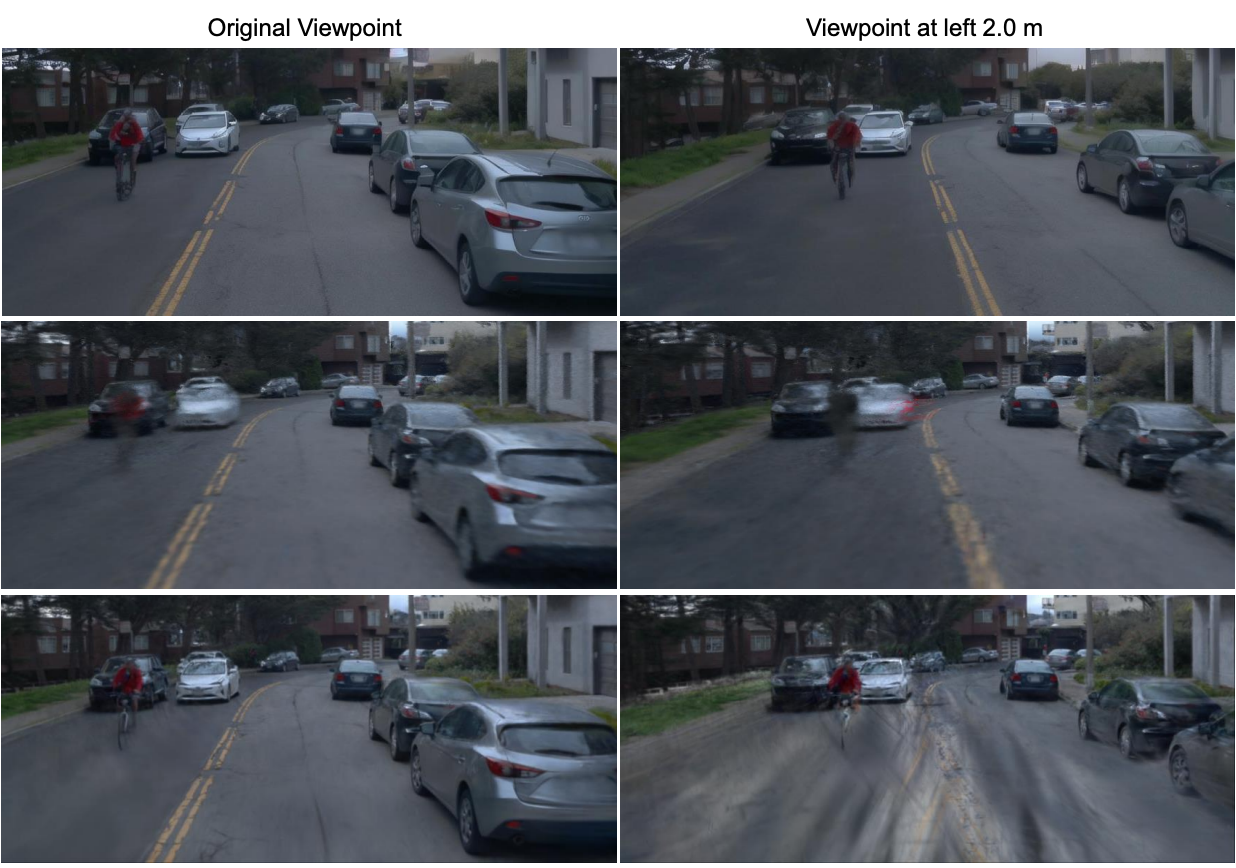

FreeVS: Generative View Synthesis on Free Driving Trajectory

Qitai Wang, Lue Fan, Yuqi Wang, Yuntao Chen†, Zhaoxiang Zhang†

ICLR 2025

[Paper][Project Page]

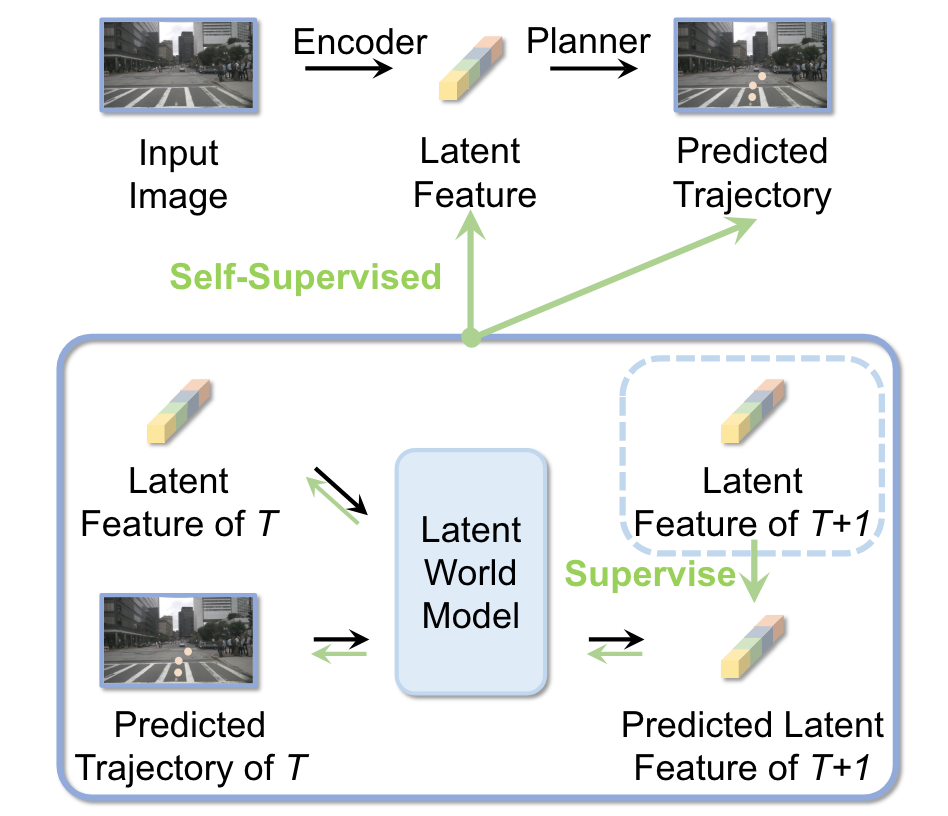

Enhancing End-to-End Autonomous Driving with Latent World Model

Yingyan Li, Lue Fan, Jiawei He, Yuqi Wang, Yuntao Chen, Zhaoxiang Zhang, Tieniu Tan

ICLR 2025

[Paper][Code]

2024

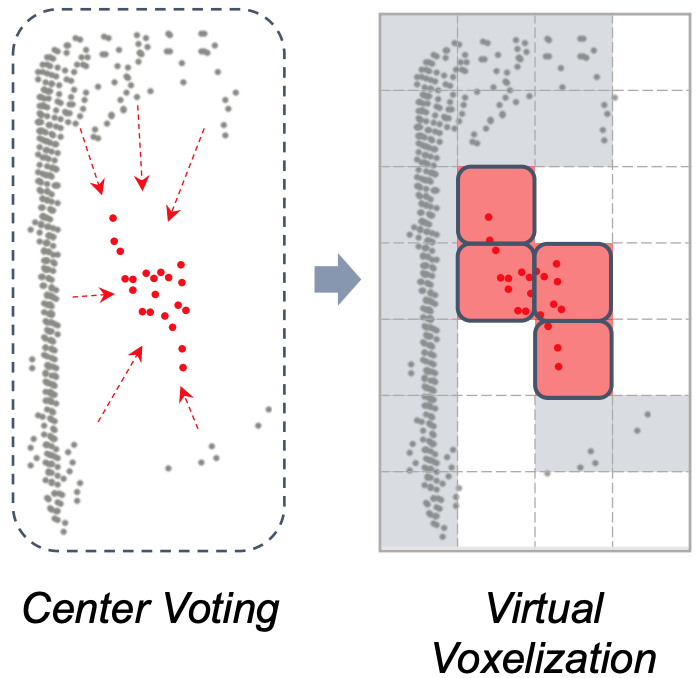

FSD V2: Improving Fully Sparse 3D Object Detection with Virtual Voxels

Lue Fan, Feng Wang, Naiyan Wang, Zhaoxiang Zhang

TPAMI 2024

[Paper][Code]

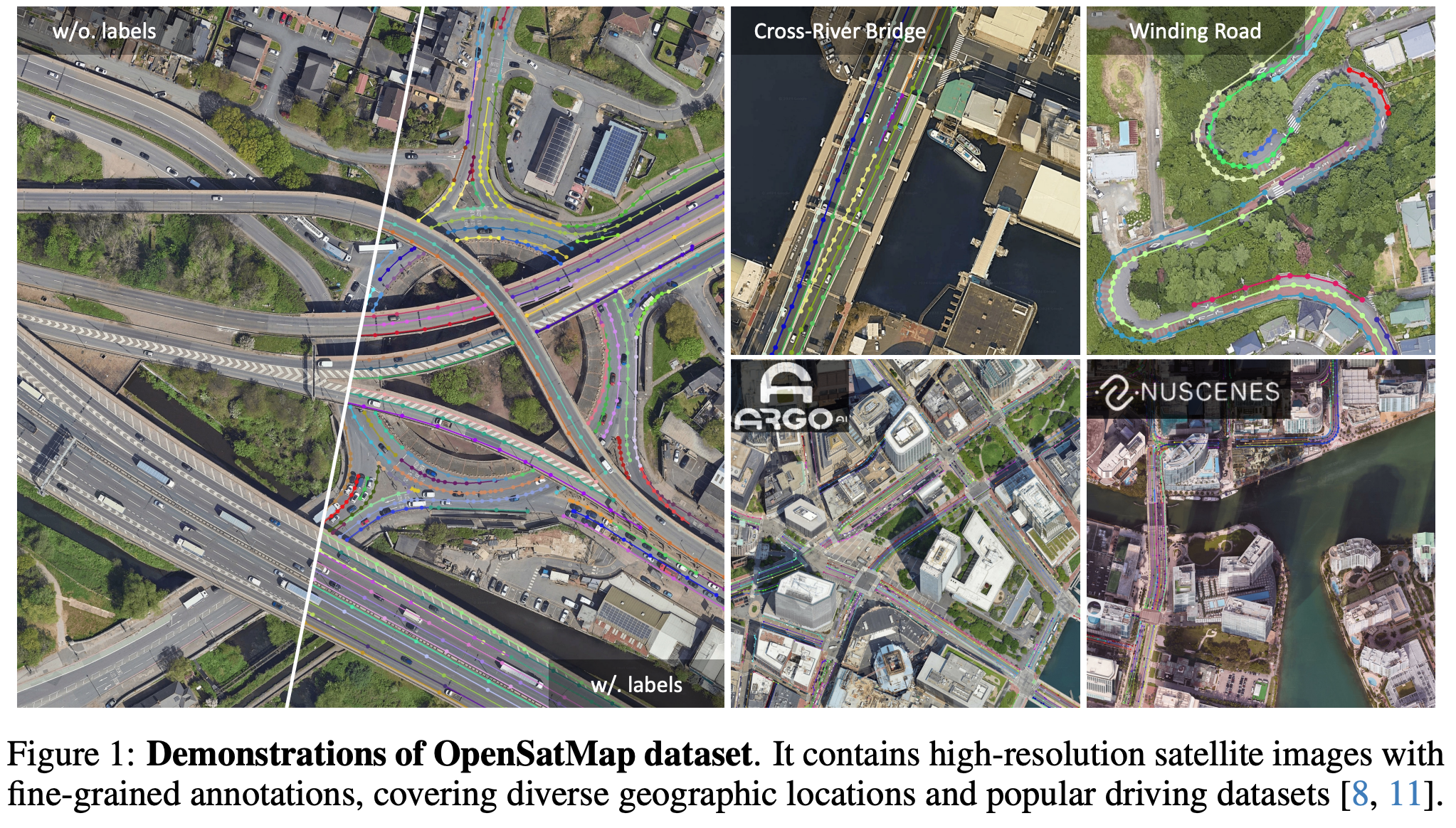

OpenSatMap: A Fine-grained High-resolution Satellite Dataset for Large-scale Map Construction

Hongbo Zhao, Lue Fan, Yuntao Chen, Haochen Wang, Yuran Yang, Xiaojuan Jin, Yixin Zhang, Gaofeng Meng, Zhaoxiang Zhang

NeurIPS 2024 D&B Track

[Paper][Project Page]

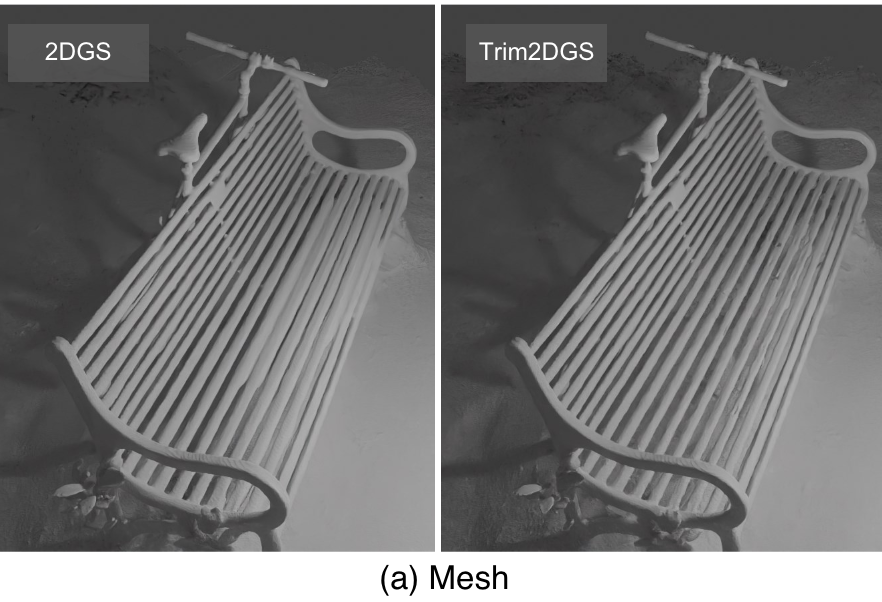

Trim 3D Gaussian Splatting for Accurate Geometry Representation

Lue Fan*, Yuxue Yang*, Minxing Li, Hongsheng Li†, Zhaoxiang Zhang†

[Paper][Code][Project Page]

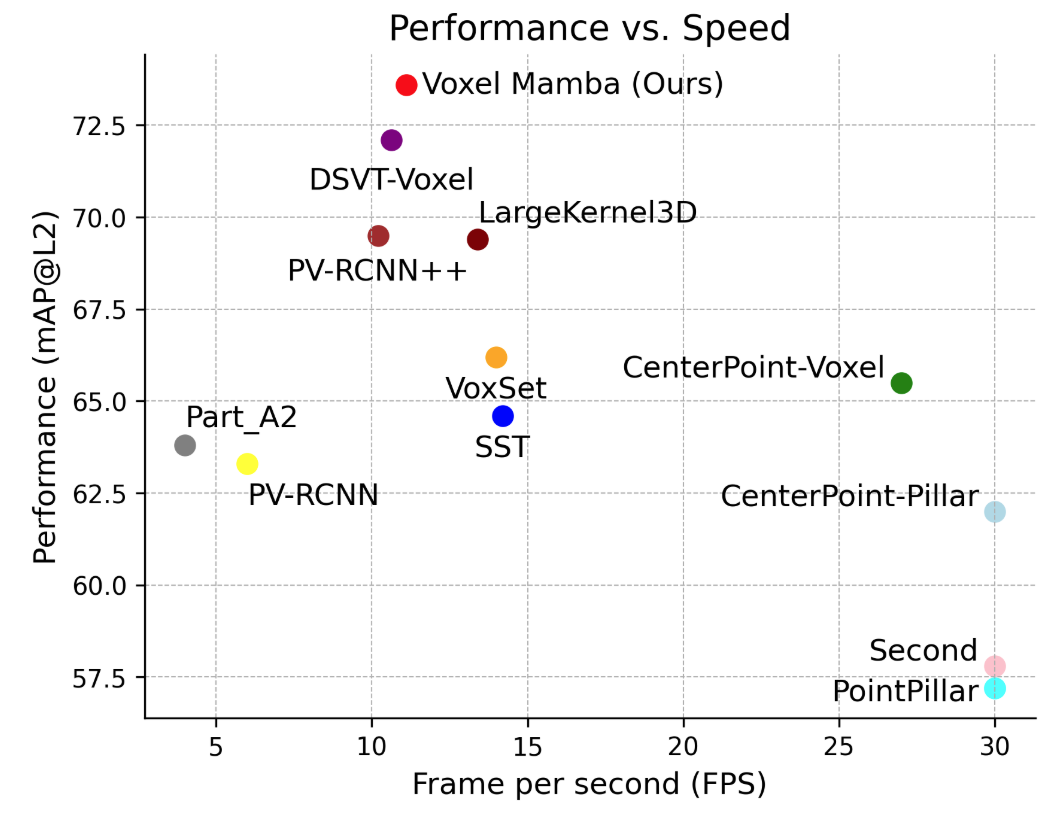

Voxel Mamba: Group-Free State Space Models for Point Cloud based 3D Object Detection

Guowen Zhang, Lue Fan, Chenhang He, Zhen Lei, Zhaoxiang Zhang, Lei Zhang

NeurIPS 2024

[Paper][Code]

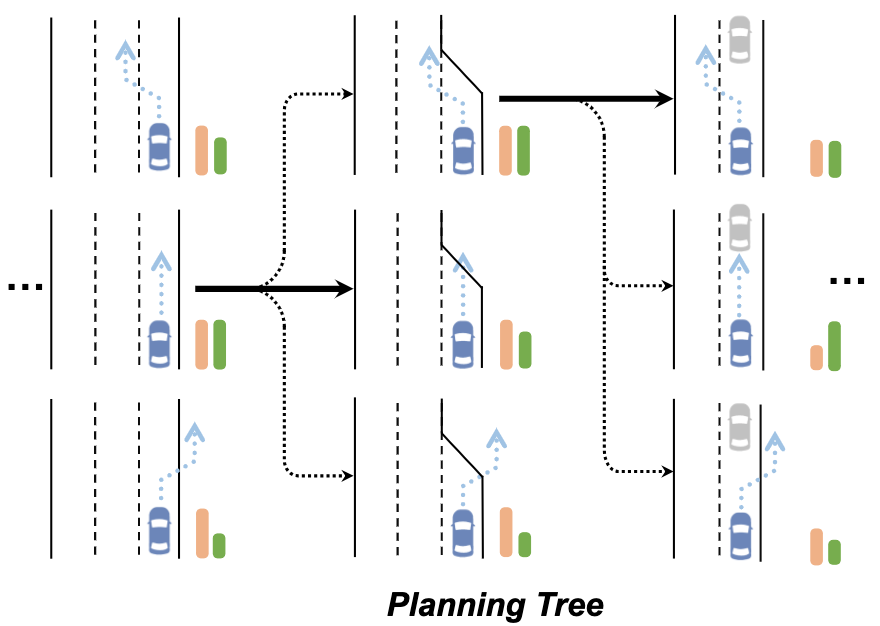

Driving into the Future: Multiview Visual Forecasting and Planning with World Model for Autonomous Driving

Yuqi Wang*, Jiawei He*, Lue Fan*, Hongxin Li*, Yuntao Chen†, Zhaoxiang Zhang†

CVPR 2024

[Paper][Code][Project Page]

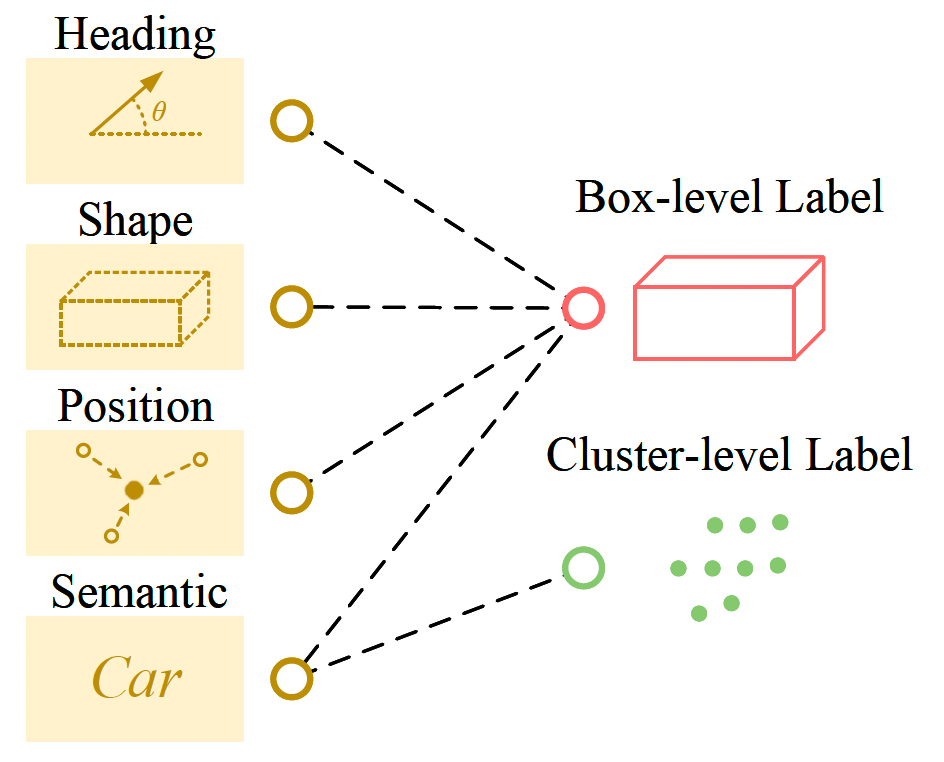

MixSup: Mixed-grained Supervision for Label-efficient LiDAR-based 3D Object Detection

Yuxue Yang, Lue Fan†, Zhaoxiang Zhang†

ICLR 2024

[Paper][Code]

2023

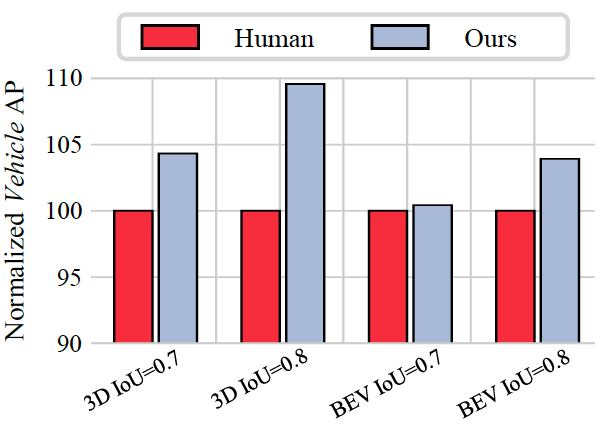

Once Detected, Never Lost: Surpassing Human Performance in Offline LiDAR based 3D Object Detection (CTRL)

Lue Fan, Yuxue Yang, Yiming Mao, Feng Wang, Yuntao Chen, Naiyan Wang, Zhaoxiang Zhang

ICCV 2023 (Oral)

[Paper][Code]

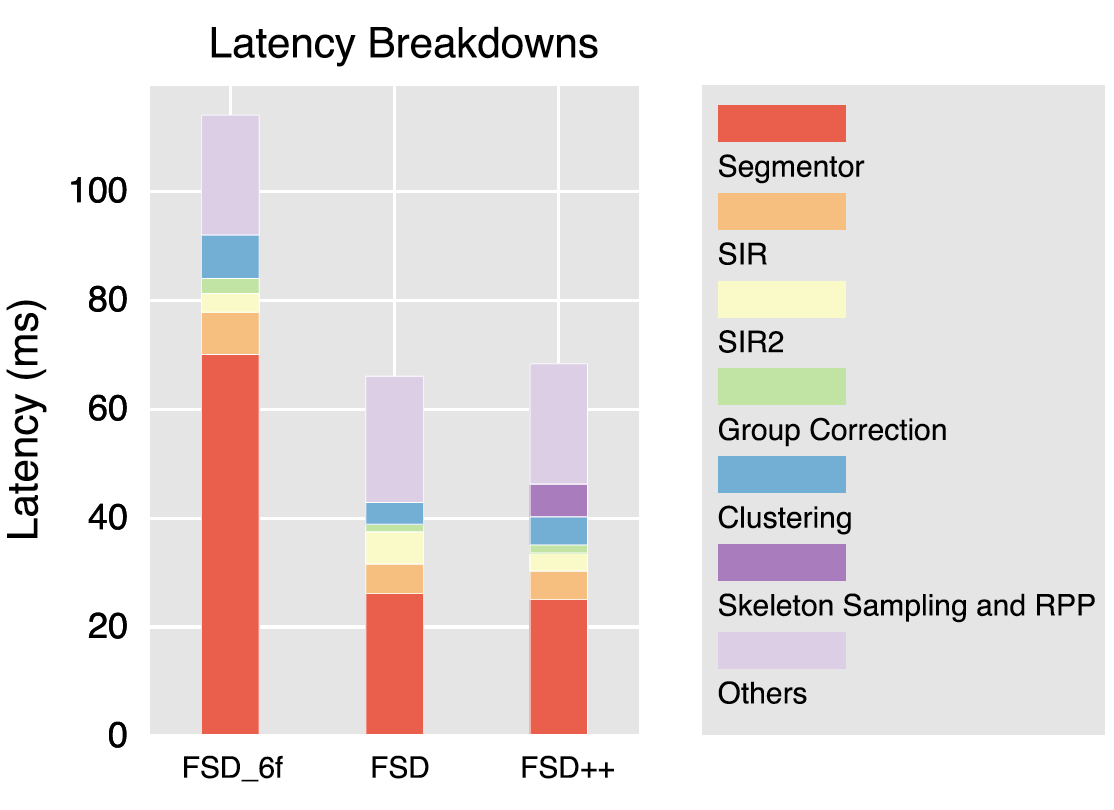

Super Sparse 3D Object Detection (FSD++)

Lue Fan, Yuxue Yang, Feng Wang, Naiyan Wang, Zhaoxiang Zhang

TPAMI 2023

[Paper][Code]

2022

Fully Sparse 3D Object Detection (FSD)

Lue Fan, Feng Wang, Naiyan Wang, Zhaoxiang Zhang

NeurIPS 2022

[Paper][Code]

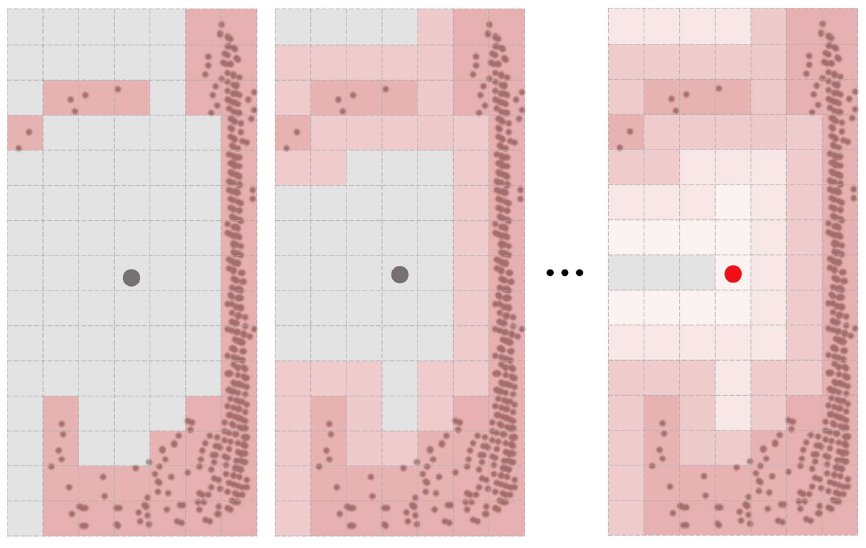

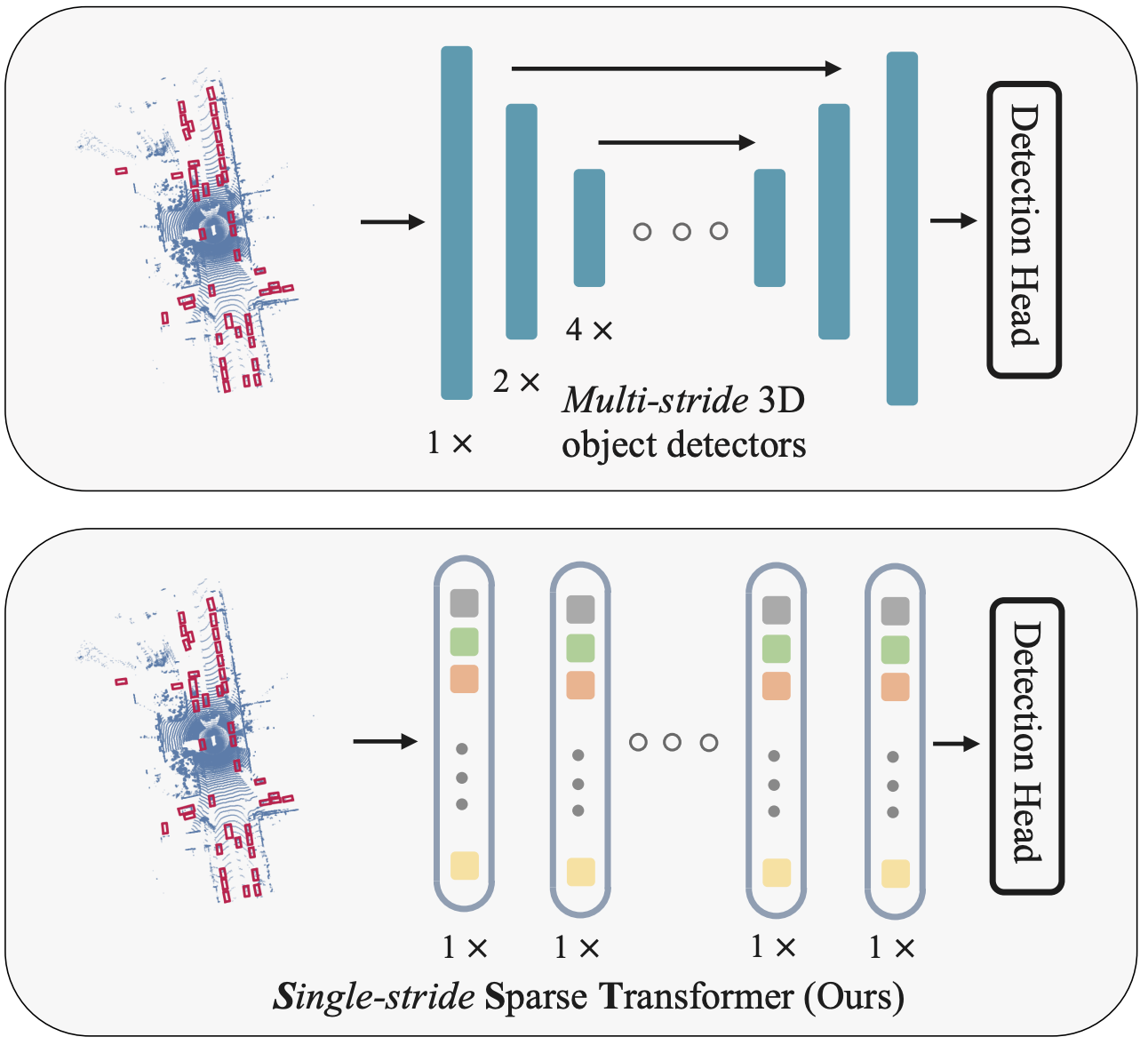

Embracing Single Stride 3D Object Detector with Sparse Transformer (SST)

Lue Fan, Ziqi Pang, Tianyuan Zhang, Yu-Xiong Wang, Hang Zhao, Feng Wang, Naiyan Wang, Zhaoxiang Zhang

CVPR 2022

[Paper][Code]

2021

RangeDet: In Defense of Range View for Lidar-based 3D Object Detection

Lue Fan*, Xuan Xiong*, Feng Wang, Naiyan Wang, Zhaoxiang Zhang

ICCV 2021

[Paper][Code]